Yes, build a better emulator and the world will beat a path to your door to run their old brown x86 binaries. Right now that emulator is QEMU. Even if you run Hangover for Windows binaries, it's still QEMU underneath (and Hangover only works with 4K page kernels currently, leaving us stock Fedora ppc64le users out), and if you want to run Linux x86 or x86_64 binaries on your OpenPOWER box, it's going to be QEMU in user mode for sure.

However, one of the downers of this approach is that you also need system libraries. Hangover embeds Wine to solve this problem (and builds its libraries natively for ppc64le to boot), but QEMU user mode needs the actual shared libraries themselves for the target architecture. This often involves laboriously copying them from foreign architecture packages and can be a slow process of trying and failing to acquire them all, and you get to do it all over again when you upgrade. Instead, just use a live CD/DVD as your library source: you can keep everything in one place (often using less space), and upgrading becomes merely a matter of downloading a new live image.

My real-world use for this is running the old brown Palm OS Emulator, which I've been playing with for retrocomputing purposes. Although the emulator source code is available, it's heavily 32-bit and I've had to make some really scary hacks to the files; I'm not sure I'll ever get it compiling on 64-bit Linux. But there is a pre-built 32-bit i386 binary. I've got a Palm m515 ROM, a death wish and too little to do after work. Let's boot this sucker up. Note that in these examples I'm "still" using QEMU 5.2.0. 6.1.0 had various problems and crashed at one point which I haven't investigated in detail. You might consider building QEMU 5.2.0 in a separate standalone directory (plus-minus juicing it) for this purpose.

We'll use the Debian live CD in this article, though any suitable live distro should do. Since POSE is i386, we'll need that particular architecture image. Download it and mount the ISO (which appears as d-live 11.0.0 gn i386 as of this writing).

The actual filesystem during normal operation is a squashfs image in the live directory. You can mount this with mount, but I use squashfuse for convenience. Similarly, while you could mount the ISO itself every time you need to do this, I just copy the squashfs image out and save a couple hundred megabytes. Then, from where you put it, make sure you have an ~/mnt folder (mkdir ~/mnt), and then:

% squashfuse debian-11-i386.squashfs ~/mnt
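The copy-and-mount step can be sketched as a pair of small shell helpers. This is only an outline under assumptions: the ISO is already mounted at a path you pass in, the image sits at live/filesystem.squashfs inside it (the usual Debian live layout, but check yours), and the function names save_squashfs and mount_sysroot are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helpers; the names and default paths are illustrative assumptions.

# Copy the squashfs image out of an already-mounted live ISO.
# $1 = ISO mount point, $2 = destination file.
save_squashfs() {
    src="$1/live/filesystem.squashfs"   # assumed Debian live layout
    if [ ! -f "$src" ]; then
        echo "no squashfs image at $src" >&2
        return 1
    fi
    cp "$src" "$2"
}

# Mount a saved image with squashfuse.
# $1 = squashfs image, $2 = optional mount point (default ~/mnt).
mount_sysroot() {
    mnt="${2:-$HOME/mnt}"
    mkdir -p "$mnt" && squashfuse "$1" "$mnt"
}
```

Something like `save_squashfs /path/to/mounted/iso debian-11-i386.squashfs && mount_sysroot debian-11-i386.squashfs` would then do both steps in one go.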

Let's test it on Captain Solo. After all, we've just mounted a squashfs image with a whole mess of alien binaries, so:

% ~/src/qemu-5.2.0/build/qemu-i386 -L ~/mnt ~/mnt/bin/uname -m

i686

And now we can return Luke Skywalker to the Emperor:

% ~/src/qemu-5.2.0/build/qemu-i386 -L ~/mnt pose
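Typing the full QEMU path and -L every time gets old, so a tiny wrapper may help. This is a sketch, not anything QEMU ships: the function name run386 and the two variables are invented here, and the defaults simply mirror the paths used in this article.

```shell
#!/bin/sh
# Hypothetical wrapper; adjust both defaults to your own setup.
QEMU_I386="${QEMU_I386:-$HOME/src/qemu-5.2.0/build/qemu-i386}"
SYSROOT="${SYSROOT:-$HOME/mnt}"

# Run an i386 binary against the mounted live image.
run386() {
    # An unmounted ~/mnt is just an empty directory, so look for bin inside it.
    if [ ! -e "$SYSROOT/bin" ]; then
        echo "$SYSROOT does not look like a mounted sysroot" >&2
        return 1
    fi
    "$QEMU_I386" -L "$SYSROOT" "$@"
}
```

With that sourced, `run386 ~/mnt/bin/uname -m` is equivalent to the longer uname test, and it refuses to run if the squashfs image isn't mounted.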

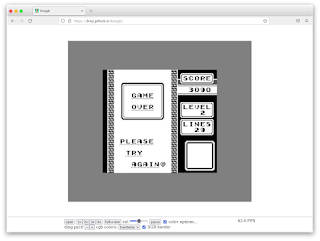

Here it is, running a Palm image using an m515 ROM I copied over from my Mac.

However, uname and pose are both single binaries each in a single place. Let's pick a more complex example with resources, assets and other loadable components like a game. I happen to be a fan of the old Monolith anime-style shooter Shogo: Mobile Armor Division, which originated on Windows (GOG still sells it) but was also ported to the classic Mac OS and Linux by Hyperion. (The soundtrack CD is wonderful.) I own a boxed physical copy not only of the Windows release but also the Mac version, which is quite hard to find, and the retail Linux version is reportedly even rarer. While there have been promising recent developments with open-source versions of the LithTech engine, Shogo was the first LithTech game and apparently used a very old version which doesn't yet function. There is, however, a widely available Linux demo.

The demo which you download from there appears to just be a large i386 binary. But if you run it using the method above, you'll only get a weird error trying to run another binary from a temporary mount point. That's because it's actually an ISO image with an i386 ELF mounter in the header, so rename it to shogo.iso and mount it yourself. On my system GNOME puts it in /run/media/spectre/ISOIMAGE.
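If you want to confirm a suspicious "binary" is really an ISO before renaming it, you can look for the ISO 9660 signature yourself. The sketch below assumes the file is an otherwise standard ISO 9660 image, with the ELF stub living in the first 32 KiB that the format leaves unused; the function name is_iso9660 is made up for this example.

```shell
#!/bin/sh
# Return success if the file carries the ISO 9660 "CD001" signature.
# The primary volume descriptor starts at byte 32768 (sector 16 of 2048 bytes);
# the identifier is the five bytes right after its leading type byte.
is_iso9660() {
    sig=$(dd if="$1" bs=1 skip=32769 count=5 2>/dev/null)
    [ "$sig" = "CD001" ]
}
```

For instance, `is_iso9660 shogo.iso && echo "looks like an ISO"`.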

To set options before bringing up the main game, Shogo uses a custom launcher (on all platforms), but you can't just run it directly because Debian doesn't have all the libraries the launcher wants:

% ~/src/qemu-5.2.0/build/qemu-i386 -L ~/mnt /run/media/spectre/ISOIMAGE/shogolauncher

/run/media/spectre/ISOIMAGE/shogolauncher: error while loading shared libraries: libgtk-1.2.so.0: cannot open shared object file: No such file or directory

You could try to scare up a copy of that impossibly old version of GTK, but in the Loki_Compat directory of the Shogo ISO is the desired shared object already. (Not Loki Entertainment: this Loki, a former Monolith employee.) You can't give qemu-i386 multiple -L options, but you can give environment variables to its ELF loader, so we'll just specify a custom LD_LIBRARY_PATH. For the next couple steps it will be necessary for us to actually be in the Shogo mounted image so it can find all of its data files, thusly:

% cd /run/media/spectre/ISOIMAGE

% ~/src/qemu-5.2.0/build/qemu-i386 -L ~/mnt -E LD_LIBRARY_PATH="/run/media/spectre/ISOIMAGE/Loki_Compat" ./shogolauncher
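Those two steps (change into the game directory, then pass LD_LIBRARY_PATH through -E) can be folded into one helper. Treat this as a sketch: run386_in and its variables are hypothetical names, and the defaults just repeat the paths from this article.

```shell
#!/bin/sh
# Hypothetical wrapper; adjust both defaults to your own setup.
QEMU_I386="${QEMU_I386:-$HOME/src/qemu-5.2.0/build/qemu-i386}"
SYSROOT="${SYSROOT:-$HOME/mnt}"

# Run a guest binary from inside its own directory with an extra library path.
# $1 = directory to run in, $2 = extra library directory, rest = command line.
run386_in() {
    (
        # The subshell keeps the caller's working directory untouched.
        cd "$1" || exit 1
        libs="$2"
        shift 2
        "$QEMU_I386" -L "$SYSROOT" -E "LD_LIBRARY_PATH=$libs" "$@"
    )
}
```

Usage would look like `run386_in /run/media/spectre/ISOIMAGE /run/media/spectre/ISOIMAGE/Loki_Compat ./shogolauncher`. Since LD_LIBRARY_PATH is an ordinary colon-separated list, the second argument could name several directories.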

We've bypassed the shell script that actually handles the entire startup process, so when you select your options, instead of starting the game it will dump a command line to execute to the screen. This is convenient! To start out with, I picked a windowed 640x480 resolution using the software renderer and disabled sound (it doesn't work anyway, probably due to the age of the libraries it was developed with), got the command line and ran that through QEMU. Boom:

And, as long as you crank the detail level down to low from the main menu, it's playable!

A lot doesn't work: it doesn't save games because you're running it out of an ISO (copy it elsewhere if you want to); there is no sound, probably, as stated, due to the age of the libraries (the game itself dates to 1998 and the Linux port to 2001); and don't even think about trying to launch it using OpenGL (it bombs out with errors). There are also occasional graphics glitches and clipping problems, one of which makes it impossible to complete the level, though I don't know how much of this was their bug versus QEMU's bug.
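The "copy it elsewhere" step might look like the following. It is a sketch under assumptions: copy_writable is a made-up name, and the chmod is there because files copied off read-only media often arrive without write permission.

```shell
#!/bin/sh
# Copy a read-only game tree to a writable location.
# $1 = source directory (e.g. the mounted ISO), $2 = destination directory.
copy_writable() {
    mkdir -p "$2" || return 1
    # "$1/." copies the contents of the source, dotfiles included.
    cp -R "$1/." "$2/" || return 1
    # Make everything in the copy writable by its owner.
    chmod -R u+w "$2"
}
```

For example, `copy_writable /run/media/spectre/ISOIMAGE ~/shogo`, after which you would cd to the copy instead of the mounted image before launching.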

Performance isn't revolutionary, either for POSE or for Shogo. However, keep in mind that all the system libraries are also running under emulation (only syscalls are native), and with Shogo in particular we've hobbled it even further by making the game render everything entirely in software. With that in mind, the fact the framerate is decent enough to actually play it is really rather remarkable. Moreover, I can certainly test things in POSE without much fuss and it's a lot more convenient than firing up a Mac OS 9 instance to run POSE there.

Best of all, when you're done running alien inferior binaries, just umount ~/mnt and it all goes away. When Debian 12 appears, just replace the squashfs image. Easy as pie! A much more straightforward way to run these sorts of programs when you need to.
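Swapping in a newer image can be scripted too. The helper below is hypothetical (swap_sysroot is an invented name) and assumes the mountpoint utility and fusermount are installed; fusermount -u is the usual way to detach a FUSE mount, though plain umount may be all you need.

```shell
#!/bin/sh
# Hypothetical helper: replace the mounted live image with a newer one.
# $1 = new squashfs image, $2 = optional mount point (default ~/mnt).
swap_sysroot() {
    mnt="${2:-$HOME/mnt}"
    if [ ! -f "$1" ]; then
        echo "no such image: $1" >&2
        return 1
    fi
    # Unmount the old image only if something is actually mounted there.
    if mountpoint -q "$mnt"; then
        fusermount -u "$mnt" || return 1
    fi
    squashfuse "$1" "$mnt"
}
```

The image check comes first so that a typo in the filename doesn't leave you with nothing mounted.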

A footnote: in an earlier article we discussed HQEMU. This is a heavily modified fork of QEMU that uses LLVM to recompile code on the fly for substantially faster speeds at the occasional cost of stability. Unfortunately it has not received further updates in several years, and even after I hacked it to build again on Fedora 34, even with the pre-built LLVM 6 with which it is known to work, it simply hangs. Like I said, for now it's stock QEMU or bust.